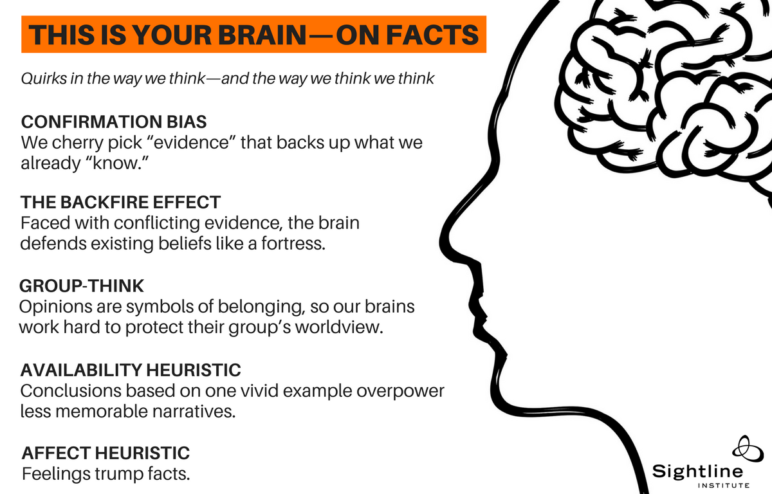

If you were watching TV in the US in the late 1980s, you’ll probably remember the anti-drug ads with the egg—“this is your brain”—and then the egg cracked into a sizzling hot frying pan—“this is your brain on drugs.” But if neuroscience and psychology and behavioral economics tell us anything, it’s that the human brain scrambles itself—no drugs required! Dozens of cognitive biases—all well studied—mean good old homo sapiens is not as wise—or rational or objective—as we’ve cast ourselves to be. Unconscious mental shortcuts, ingrained social survival impulses, and evolutionary glitches complicate how we evaluate new information, form opinions, gauge risk, or change our minds.

And I mean all of us. Don’t forget that rascally blind spot bias, where we tend to notice others’ flaws in reasoning far more readily than we see our own.

As we humans seem to careen toward an epistemological precipice sped along by intense partisanship, it’s worth reviewing some of the most powerful tricks our own brains play on us.

Confirmation bias: We cherry pick “evidence” that backs up what we already “know”

Consider the news sources you trust compared to the ones your politically opposite uncle reads. You each think the other is spouting fake news. But both of you, consciously and unconsciously, seek out information that supports your existing beliefs and ignore or reject information that contradicts them. And it’s not just looking for proof that we are right; which information we deem credible, how we interpret it, and what we remember also serve existing convictions over new ones and protect us from having to admit, even to ourselves, that we were wrong.

The backfire effect: Faced with conflicting evidence, the brain defends existing beliefs like a fortress

Think of your belief system as a house—but not just any house, this is the very structure that your identity, your worldview, your common sense, your self calls home! When new evidence threatens to destroy even one building block of our house, we build up defenses in order to keep the whole thing from falling down. When someone challenges our preconceptions we may very well dig in our heels. And this is only partly metaphor. Ask a neuroscientist and they’ll tell you that beliefs are physical, established in the very structure of our brains. “To attack them is like attacking part of a person’s anatomy.” (Do not miss The Oatmeal’s explanation of the backfire effect!)

Group-think: “When opinions are symbols of belonging, our brains work overtime to keep us believing”

That’s how Dan Kahan, Yale law and psychology researcher, describes group-think. Our affinity groups go a long way to define who we are and what we think. People around us give us confidence we’re right because we all agree. Again, our identity depends on upholding and protecting the group’s worldview. It’s the backfire effect all over again. We’d rather justify our strongly held beliefs than change our minds or fly in the face of our group’s norms. And just like confirmation bias, this is a kind of “identity-protective cognition.” Science writer Chris Mooney explains:

Our political, ideological, partisan, and religious convictions—because they are deeply held enough to comprise core parts of our personal identities, and because they link us to the groups that bulwark those identities and give us meaning—can be key drivers of motivated reasoning. They can make us virtually impervious to facts, logic, and reason.

Pro tip: If you’re trying to change people’s minds, consider messengers from within their trusted social group. (See also: In-group bias and false consensus bias.)

Availability heuristic: False conclusions based on one vivid example overpower less memorable narratives

What comes to mind most readily can shape our thinking. For example, a few high-profile murder cases stick in our minds and may drown out less flashy statistics about declining violent crime rates in our city. We tend to jump to conclusions based on the incomplete information that stands out in our minds. “The problem is that too often our beliefs support ideas or policies that are totally unjustified,” says author and researcher Steven Sloman.

Affect heuristic: Feelings trump facts

Tugging at heartstrings? Going for the gut? Commercial marketers, political campaigners, and psychologists know this one well: the tendency to make decisions based on emotion, not facts. The brain is emotional first (system one, the fast, automatic response), analytical later (system two, the slower, more thoughtful process). But the systems aren’t disconnected. A network of memories, associations, and feelings “motivates” our system two reasoning, making objective judgement elusive. According to Drew Westen, psychologist, political consultant, and author of The Political Brain, the brain on politics is essentially the brain on drugs. In fact, the same chemicals are in play. Positive emotions are related to dopamine (a neurotransmitter found in reward circuits in the brain), and inhibition and avoidance are associated with norepinephrine (a close cousin of the hormone adrenaline, which can produce fear and anxiety). In his research, partisans’ brains sought out the good chemicals and avoided the bad ones.

All this is to say that facts aren’t a magic serum for changing minds. In fact, pouring on more facts can have the opposite effect, entrenching people’s existing beliefs. You knew that. But it’s good to review. Perhaps if we stop more often to think about how we think, we’ll be better equipped to venture out of our own echo chambers, find empathy and understanding rather than fanning the flames of polarization, and map a bit more common ground.
